I want to shed light on an often overlooked use of Docker: as the build environment for large and legacy applications that must be maintained and extended regularly. In particular, I want to highlight Docker's role during the build process.

In my opinion, this aspect doesn't receive the attention it deserves, because discussions of Docker focus mostly on running applications, where it provides a significant boost in productivity.

The build tool

Every major technology has a set of build tools that automate tasks around the actual coding such as downloading dependencies, compilation, or bundling. Generally speaking, you have a major build tool that provides a workflow and plugins that execute the specific tasks.

At the start of a project, developers have no trouble installing the build tools themselves. The tools are just a handful of programs that need no customisation. For example, Java offers choices such as Gradle or Maven, .NET primarily MSBuild, and JavaScript webpack or gulp.

How build tools become build environments

Over time, that simplicity disappears as your build tool grows to the point where you can no longer simply call it a tool. Two main reasons drive this transformation:

- Increased complexity

- Legacy technology

Things get more complicated as you add new tools, customise existing ones, change your operating system's settings, and so on. Furthermore, you will face tasks for which no existing tool provides automation, so you have to write one yourself.

When an application runs successfully for many years, chances are high that it lives through the rise and fall of several generations of technology. Quite often, parts of the application, as well as the build tools, are based on outdated technology, since porting the whole application is not always possible.

What began as a handful of programs grows more complex and spans different technologies until it becomes a collection of tools (and even hacks) that I call a build environment.

Build environment as the problem child

Build environments have little tendency to change once they have been installed on a developer’s machine. The developers are just happy that it works and, following the maxim “never change a running system”, won’t touch it at all.

It’s no wonder that, over time, knowledge about each tool fades and no maintenance gets done.

Ultimately, this hinders the adoption of new technologies.

Professional software developers should be allowed to set up their own development environment; doing so encourages them to try new things and be innovative. Eventually, however, the build environment becomes so complex that each developer is handed a copy of a virtual machine, or a machine set up by a configuration management tool like Ansible.

This is the moment where you should realise that something is wrong.

Running build environments in Docker

As we've seen, build tools grow because of complexity and legacy. They end up as a build environment that is hard to maintain and extend, and they can even put heavy constraints on the development environment, limiting developers' freedom of action.

This is the moment where Docker enters the stage. It lets us put complete applications into containers that we execute on any machine that has the Docker runtime installed. This eliminates repetitive administrative tasks such as configuration and installation.

The whole build environment can be described in a single Dockerfile, from which a Docker image is built and containers are started. The Dockerfile is, roughly, a list of commands executed while the image is created, so the complete installation and configuration must happen there. This forces us to fully automate our build environment, since no manual steps are possible.
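To make the "no manual steps" point concrete, here is a minimal sketch of a build-environment Dockerfile. The base image, the package list, the `ANT_OPTS` value and the `tools/` directory of self-written helpers are illustrative assumptions, not a prescribed setup.

```dockerfile
# Sketch only: base image, packages and the tools/ directory are assumptions.
FROM debian:bookworm-slim

# Installation: every tool is installed by a command, non-interactively.
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
      ant \
      make \
 && rm -rf /var/lib/apt/lists/*

# Configuration: settings that used to be tweaked by hand on each machine
# become explicit, versioned steps (ANT_OPTS passes JVM options to Ant).
ENV ANT_OPTS="-Xmx512m"

# Self-written helper scripts (hypothetical tools/ directory) ship with the image.
COPY tools/ /opt/build-tools/
ENV PATH="/opt/build-tools:${PATH}"
```

Anything that is not written down in this file simply does not exist in the image, which is exactly what keeps the build environment reproducible.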

A Docker-based approach guarantees that every developer, as well as your CI, uses the same build environment. That eliminates the "works on my machine" syndrome caused by differences between build environments. Also, a Docker container starts immediately on each developer's machine. By adding a shell script that runs the container with the appropriate parameters, new developers can start working on the codebase on their very first day.
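The shell script mentioned above stays short if the image itself fixes the working directory and the build tool. The following sketch assumes Ant as the build tool, `/data` as the (hypothetical) mount point for the sources and `jar` as the (hypothetical) default target.

```dockerfile
# Sketch only: /data and the "jar" default target are assumptions.
FROM debian:bookworm-slim
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends ant \
 && rm -rf /var/lib/apt/lists/*

# The project sources are expected to be mounted here at run time.
WORKDIR /data

# Arguments given to `docker run` after the image name are passed to ant.
ENTRYPOINT ["ant"]
# Without arguments, the container runs the "jar" target.
CMD ["jar"]
```

After `docker build -t legacy-build .`, the wrapper script essentially boils down to `docker run --rm -v "$PWD":/data legacy-build "$@"`, so a developer can call it with any Ant target.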

Docker build environments increase productivity

Of course Docker can’t remove the burden of complexity and legacy, but it makes things easier.

Containers don't boot an operating system of their own, so execution starts almost immediately. That makes containers faster to start than virtual machines running in VirtualBox or VMware, and much faster than setting up physical machines.

You can reuse your existing configuration management tools like Ansible or Puppet and, since the Dockerfile is kept in version control, you can try new things and revert them easily.
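As an example of reusing configuration management, the sketch below installs Ansible in the image and applies an existing playbook to the image itself through a local connection. The playbook name `build-env.yml` is hypothetical, and the playbook has to target `localhost` (or `all`) for this to work.

```dockerfile
# Sketch only: build-env.yml is a hypothetical playbook that used to
# configure developer machines.
FROM debian:bookworm-slim

# Install Ansible inside the image so existing playbooks can be reused.
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends ansible \
 && rm -rf /var/lib/apt/lists/*

COPY build-env.yml /tmp/build-env.yml

# "-i localhost," is an inline inventory with a single host;
# "-c local" runs the tasks directly inside the image, without SSH.
RUN ansible-playbook -i localhost, -c local /tmp/build-env.yml
```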

Docker also greatly improves the developer experience. Because Docker can share directories with the host system, developers edit the source files on the host with whatever IDE they want while the build runs inside the container; many modern IDEs also come with Docker support. With no need to sync source files, they write code as they are used to and trigger the build.
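One practical detail when sharing directories: if the build runs as root inside the container, the files it writes into the mounted source directory end up owned by root on the host. A common pattern, sketched here with hypothetical `UID`/`GID` build arguments and a hypothetical `builder` user, is to run the build as a user with the developer's IDs.

```dockerfile
# Sketch only: UID/GID build arguments and the "builder" user are assumptions.
FROM debian:bookworm-slim

ARG UID=1000
ARG GID=1000

# --force makes groupadd succeed even if a group with this GID already exists;
# useradd then joins whichever group owns that GID.
RUN groupadd --force --gid "${GID}" builder \
 && useradd --uid "${UID}" --gid "${GID}" --create-home builder

# Build output written into the mounted directory now belongs to the developer.
USER builder
WORKDIR /data
```

The image would be built with `--build-arg UID=$(id -u) --build-arg GID=$(id -g)` and run with the sources mounted via `-v "$PWD":/data`.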

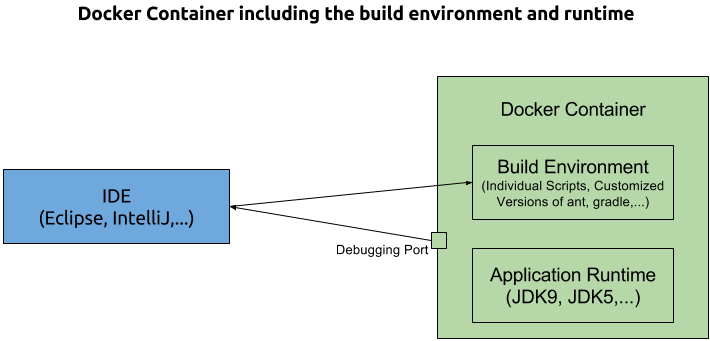

If your technology supports debugging over the network (Java does), this also works with Docker. The following drawing shows the principle for a Java-related scenario.
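For Java, this means starting the JVM with the JDWP agent and publishing the debug port. The sketch below assumes a prebuilt `legacy.jar` in the build context and picks port 5005 arbitrarily; with Java 6, `address=5005` listens on all interfaces.

```dockerfile
# Sketch only: legacy.jar in the build context and port 5005 are assumptions.
FROM java:6
COPY legacy.jar /legacy.jar

# Port on which the JDWP agent waits for a debugger.
EXPOSE 5005

# server=y: the JVM listens for the debugger; suspend=n: start without waiting for it.
CMD ["java", "-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5005", "-jar", "/legacy.jar"]
```

Running the container with `-p 5005:5005` lets the IDE on the host attach a remote debugger to `localhost:5005`.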

Showcase

Since every build environment has its own individual character, I only show a very minimalistic example: an application that runs on Spring 1.0 and uses Ant as its build tool. This combination dates back to 2004.

I leave it to your imagination how the build environment could have evolved over such a long period of time. My main intention is to give a starting point to catch the general idea.

The complete project is available on GitHub. Please note that the Dockerfile uses the new multi-stage build feature introduced with version 17.06 CE:

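```dockerfile
# Stage 1: build environment with JDK 6 and Ant 1.6.3
FROM java:6 AS builder
# Copy the project sources into the image
COPY . /data
# Download and unpack Ant 1.6.3 into / (the default working directory)
RUN wget http://archive.apache.org/dist/ant/binaries/apache-ant-1.6.3-bin.tar.bz2
RUN tar xfj apache-ant-1.6.3-bin.tar.bz2
# Run the "jar" target of the project's build file
RUN /apache-ant-1.6.3/bin/ant -buildfile data/build.xml jar

# Stage 2: runtime image that contains only the built jar
FROM java:6
COPY --from=builder /data/build/legacy.jar /legacy.jar
CMD ["java", "-jar", "/legacy.jar"]
```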